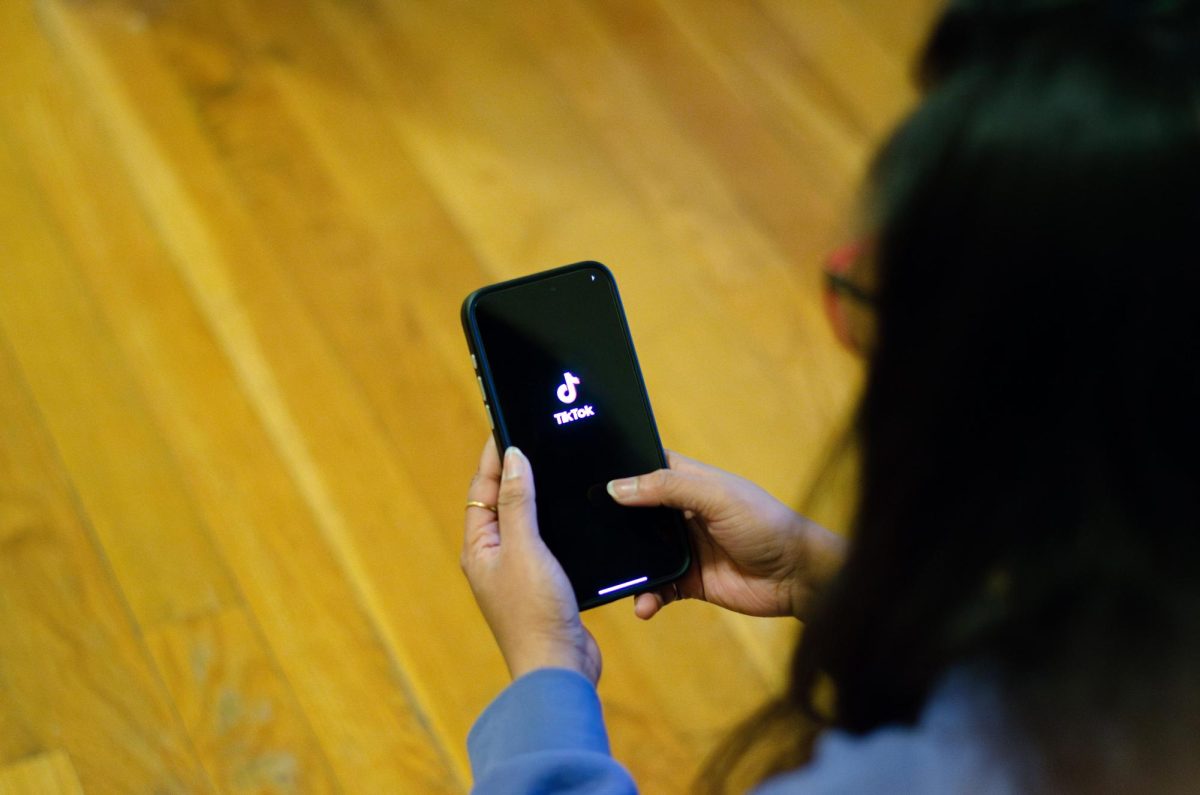

Every time an innovative technology makes its way into the mainstream, critics come out of the woodwork, without fail, to fearmonger about automation and “kids these days.” People did it with the internet, with TVs and computers, with rotary phones, and people are doing it again with artificial intelligence. Setting aside questions of copyright law, most of these arguments are uninformed at best and willfully ignorant at worst.

Discourse surrounding AI is fundamentally flawed because many people misunderstand what AI programs like ChatGPT actually do. AI programs are not search engines, and they don’t “come up” with responses the way a person does; they’re generative models that learn statistical patterns from huge collections of text and use those patterns to produce easily digestible answers. In other words, AI recombines sentences and concepts from the material it was trained on, like Wikipedia articles. It doesn’t generate any ideas by itself.

These programs do sometimes generate brand-new information: that is, fake information. Artificial intelligence “hallucinations” happen when the program receives a prompt and makes, effectively, an educated guess about what its response should be. However, the AI doesn’t “understand” anything that it says. AI programs string together words based on which words are most likely to appear together, and in what order. As long as the words “sound” correct to the AI, it has no way of checking whether the sentence is true.
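For readers who want to see that word-by-word guessing in action, here is a toy sketch in Python. It is not how ChatGPT works internally (real systems use large neural networks trained on far more text), and the tiny corpus and every function name below are made up for illustration. The sketch only counts which word tends to follow which, then shows that a false sentence can “sound” right because each pair of neighboring words has been seen before.

```python
from collections import Counter, defaultdict

# Hypothetical three-sentence "training set"; real systems learn from vastly more text.
CORPUS = (
    "the moon orbits the earth . "
    "the moon is made of rock . "
    "the cake is made of cheese ."
)

def build_bigram_counts(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def sounds_plausible(counts, sentence):
    """True if every adjacent word pair in `sentence` appeared somewhere in the corpus."""
    words = sentence.split()
    return all(counts.get(a, Counter())[b] > 0 for a, b in zip(words, words[1:]))

counts = build_bigram_counts(CORPUS)
print(most_likely_next(counts, "made"))                        # → of
print(sounds_plausible(counts, "the moon is made of cheese"))  # → True
print("the moon is made of cheese" in CORPUS)                  # → False
```

The sentence about the moon never appears in the corpus and is not true, yet it passes the only test this toy model can apply: each word is a familiar follow-up to the one before it.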

Hallucinations make these programs intrinsically unreliable as writing tools. AI programs have utility as aggregators, but someone has to be there at every step, double-checking that what they produce is real. So, if these programs are so demonstrably inconsistent, how are some students able to use them to write essays and pass classes?

The answer might lie not with AI technology, but with professors and the institution of academia at large. Ostensibly, the goal of universities is to provide a higher-level education that fosters learning while allowing students to narrow their interests. In practice, academia has historically been a way to restrict higher-paying jobs to those with the money to afford college. Although public universities have eased this problem, thanks especially to pushes from the civil rights movement in the 1950s and 1960s, the reality for a lot of people is that a degree remains out of reach.

Inevitably, this structure means a significant portion of any given student’s college career is busywork. Be honest: Did you learn anything from that two-page paper you had to write in English, or did you zone out and write what you knew your teacher wanted to hear? If the goal of these classes truly is learning, then maybe they need to be more rigorous; the sign of a good class is what the student retains for years afterwards, not how much homework they were saddled with in the moment. Classes where ChatGPT can author every essay should not be graduation requirements.

Arguments against AI from a plagiarism perspective similarly fall flat. That’s not to say that AI cannot be used to plagiarize something. Some AI programs openly boast that they can copy any artist’s style or mimic any writer; using these programs to pass yourself off as another creator is in line with classic definitions of plagiarism. However, the idea that any use of AI programs at all is plagiarism is objectively incorrect.

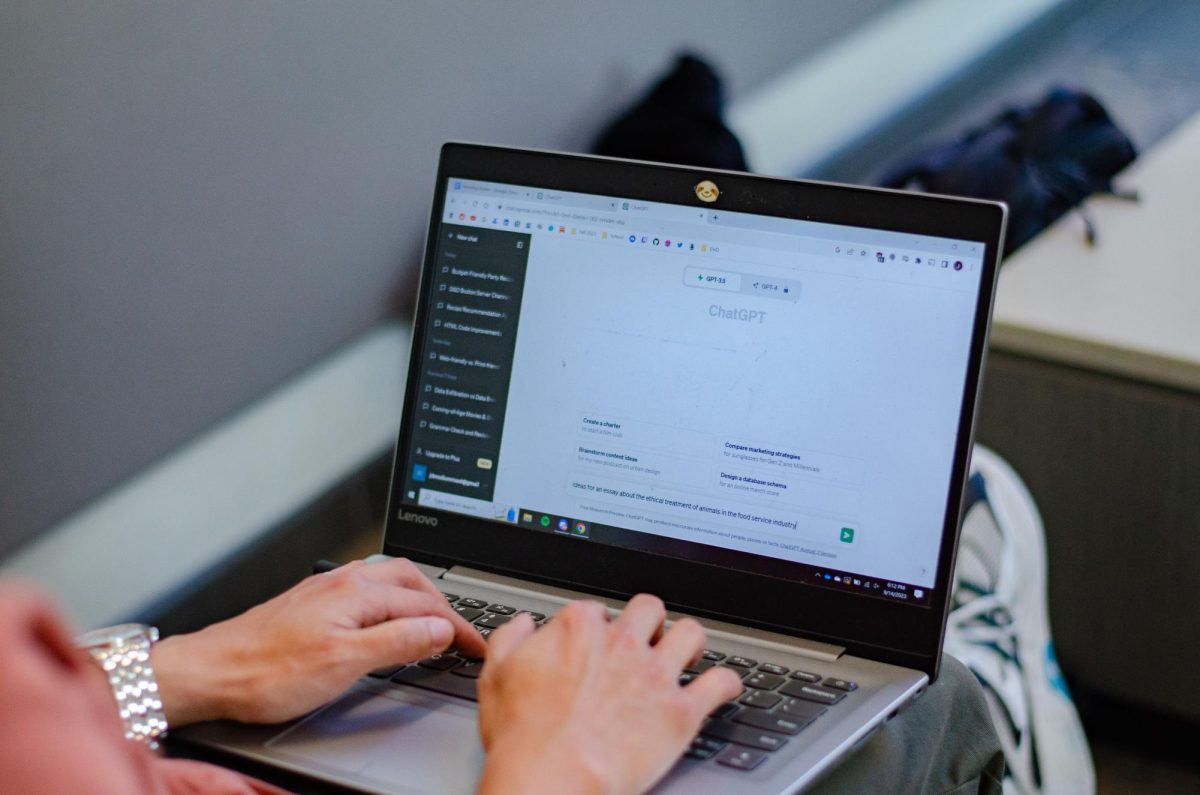

Automation only becomes an issue when it replaces workers; that is, AI is only problematic when, instead of performing menial tasks, it’s used to completely take over someone’s job. This was brought to the forefront of the AI debate in the summer of 2023, when Writers Guild of America strikers argued that media corporations would use AI to replace writers. AI could be used to streamline these jobs, but plots written entirely by an algorithm have the potential to completely upend hundreds of artists’ lives. Other industries are encountering similar problems as big tech corporations like Google roll out dozens of AI programs.

Artificial intelligence is a tool that can be extremely powerful when used correctly. It’s not the downfall of humanity, but it’s also not going to be the way of the future. More likely, it’s going to go the way of NFTs, cryptocurrency and every other modern attempt to revolutionize the way we use the internet. As it stands, AI is riddled with technological and social problems, and if it doesn’t become obsolete in the next few years, it will become another mundane aspect of bureaucratic life.